Friday, January 10, 2020

EU can look to 1783 for a way through Brexit

Viscount Ridley finds wisdom in British and American history

Britain’s strategy after losing the War of Independence was generous and helped trade thrive

Frans Timmermans, the vice-president of the European Commission, is singing a more friendly tune to Britain than his fellow commissioners: “We’re not going away and you will always be welcome to come back”. In a similar vein, “You’ll be back,” sings King George III’s character in the musical Hamilton, in a love song to his rebellious colonies, but adds: “And when push comes to shove/I will send a fully armed battalion to remind you of my love”. Though they have stopped short of sending battalions, too often the rulers of the European empire have seemed to be adopting a counterproductive hostility to their departing British colony.

When William Petty, Earl of Shelburne, became prime minister in July 1782 he faced roughly the same problem as the EU faces today: how generous an empire should be to a departing nation, in that case the 13 American colonies. As sore losers of the recent war, British ministers’ initial stance towards the Americans at the Paris treaty negotiations that began that year was condescending and tough: call them “colonials”, threaten to deny them access to British and Caribbean ports and refuse their demands for land beyond the Appalachians.

Shelburne realised this was a mistake, if only because Britain might need the Americans as allies in future conflicts with the French. But also, being a leading champion of free trade and an avid follower of Adam Smith, he refused to see the negotiation as a zero-sum game.

Being generous to the Americans would benefit both sides in the long run, Shelburne argued. So he changed tack and instructed Richard Oswald, the British delegate, to offer the astonished American delegates — Benjamin Franklin, John Adams and John Jay — a uniquely generous deal instead. The deal was agreed in 1783. It meant that the United States, as they would come to be known, would get access to British ports and would have open-ended ownership of the vast trans-Appalachian lands, including the extensive territory known as Illinois County, an area that had previously been deemed still British.

Although the Americans bit Oswald’s hand off, and peace treaties with the French, Dutch and Spanish soon followed, it made the sometimes devious and unreliable Shelburne unpopular with most of his fellow cabinet ministers and the British public. Charles James Fox criticised Shelburne for having made “concessions in every part of the globe without the least pretence of equivalent”. That is the eternal zero-sum cry of the mercantilist who does not think that gains from trade can be mutual. Shelburne lost office in March 1783 and never served as prime minister again.

Yet he was proved right. America’s subsequent prosperity helped Britain hugely by providing it with both a market in which to sell its manufactured goods and a hinterland to source the raw materials it needed. It was not all smooth sailing, of course, and there was the small matter in 1814 of British troops burning the White House in retaliation for the destruction of property in Canada during a brief sideshow of the Napoleonic wars. But in the long run the special Anglo-American relationship emerged and endured to immense mutual benefit.

So far the EU has taken a tough line on Brexit, hoping to make it so unpleasant that we change our minds. But this has not worked. Dismissing David Cameron’s request for comprehensive reform in 2015, weaponising Northern Ireland to defeat Theresa May’s soft Brexit in 2018 and taking advice from the likes of Tony Blair and Dominic Grieve on how to negotiate in order to get a second referendum have led the EU into a dead end and resulted in a “harder” Borisite Brexit than would otherwise have occurred.

Now that we are definitely leaving this month, will the EU continue to try an intransigent strategy as it seeks a new relationship with Britain? Given the failure of the British to change their minds thus far, the extinction of the possibility of a second referendum and the fact that the British economy has not stumbled since 2016, that might be unwise.

Thomas Kielinger, a veteran German journalist, argued recently that “the Europeans need to get their act together. They must create a relationship with Boris. They are not going to be able to be stubborn or refuse compromises.” The more difficult they make a free-trade deal, the more they will encourage British firms to look to America and Asia for business deals instead.

True, feelings are running high in Brussels where the demagoguery of the Brexit Party MEPs in the European Parliament no doubt causes some resentment, but feelings have been running high here too. For three years we have been subjected to the curl of Jean-Claude Juncker’s lip, the hiss of Donald Tusk’s lisp, the shrug of Michel Barnier’s shoulders and the snarl of Guy Verhofstadt’s tweets, not to mention the BBC’s Katya Adler perpetually explaining to us how much better Brussels’ negotiators are than ours. These have not endeared Britons to the project they are now leaving.

Mr Barnier seems tempted to repeat his tough stance, to show us what fools we have been to step outside the tent. But the governments of member states, and Mr Timmermans, are edging towards a more emollient line, mindful of the opportunity we will both have to be markets for each other’s goods and services.

Lord Shelburne did the right thing and got the sack. The lucky thing for President Ursula von der Leyen, as she contemplates whether to follow in his footsteps, is that, being unelected, she does not have to worry about losing office.

SOURCE

UK: Judges are appointed to dispense justice – not radical social policies

Great minds have argued for centuries about what constitutes a religion or a philosophy. Nowadays these matters can be decided by an employment tribunal in Norwich. There, last week, a judge, Robin Postle, ruled that ethical veganism (as opposed to being vegan just to lose weight) qualifies as a philosophical belief, which is a “protected characteristic” under the Equality Act 2010.

Just before Christmas, another employment tribunal – this time in London – found against Maya Forstater, a consultant for the Centre for Global Development think-tank. She had tweeted against government plans to allow people to self-identify as being of a different gender from their sex at birth. For this, she was dismissed.

She complained that her dismissal infringed her right to hold philosophical beliefs. No, said the judge, James Tayler: her view of sex and gender was of an “absolutist nature” and “incompatible with human dignity and the fundamental rights of others”.

I see much more work for lawyers in this. After all, the idea that your sex is biologically determined is common to many religions and many non-religious belief systems everywhere. Is that idea now actually illegal? If so, how are religious and philosophical rights protected under the Equality Act?

It is important to understand that such rights do not merely uphold – as they should – the freedom to hold beliefs such as veganism. They affect a myriad of everyday things which are troublesome for the rest of us. Will it now be a duty in all workplaces to provide vegan food and to make sure that vegan employees do not have to handle anything made from animals?

Once upon a time, a forgotten concept known as common sense would have ensured that vegans did not seek jobs in butchers’ shops. But now that they have acquired rights under law, how long before they get hired for the meat counter in Sainsbury’s and then protest that the management infringes their rights when it tells them to slice bacon?

How do employment judges know the answers to these vexed questions? Judge Tayler referred repeatedly to something called the Equal Treatment Bench Book, a sort of semi-official guidance. This book, I notice, goes well beyond the law. It embraces, for instance, the concept of Islamophobia, although it currently has no legal definition. Judge Tayler is also a “Diversity and Community Relations Judge”. Did you know there are scores of them?

The more one looks, the more one finds that a judicial career can now be a vehicle for those pursuing radical social and political causes. The idea of most citizens that it is simply about dispensing justice fairly is beginning to look woefully 20th-century.

SOURCE

Big government earns distrust

WHEN DWIGHT EISENHOWER and John Kennedy were in the White House, about 75 percent of Americans trusted the federal government to do the right thing most of the time. That number began to plummet during Lyndon Johnson's administration and by Election Day 1968 had fallen to the low 60s. It continued to fall under Richard Nixon, and by the time Jimmy Carter's term as president had ended, public trust in the federal government was down to just 30 percent. It briefly spiked above 50 percent after 9/11, then sank even lower. Barack Obama's presidency saw public trust fall to the teens, which is where it remains under Donald Trump.

Why did Americans stop trusting their government? There is no single answer, of course. But perhaps part of the reason is that the size and scope of the federal establishment metastasized far beyond the level at which it could maintain a reputation for fairness and reliability. Since the 1960s and 1970s, Washington has insisted on doing more, regulating more, intervening more, spending more, and micromanaging more than ever before. As the view increasingly took hold that the government is obliged to solve every problem, its inevitable failure to do so became impossible to ignore. The bigger government grew and the more it intruded in citizens' lives, the sourer the taste it left in many mouths.

Americans embark on this presidential election year with their trust in Washington's abilities at or near an all-time low. Yet most of the Democratic politicians running for president want the government to be given even greater powers. As vast as the federal behemoth has grown, Elizabeth Warren, Bernie Sanders, Pete Buttigieg, and the others want it to grow vaster. In countless areas of life — medical costs, energy, college loans, gun control, labor unions, child care, and so many others — they call for more regulation, greater spending, new entitlements. They propose sweeping economic transformations and radical social shifts. And never do they voice even a qualm about the wisdom of conferring more authority and dominance on a bloated government that most Americans say they don't trust.

The same politicians and political activists who mistrust so many people in the private sector feel no such wariness about the public sector. Everywhere they look, these politicians see citizens who cannot be trusted to make decisions for themselves: employers, gun owners, Big Tech executives, oil producers, health insurers, Wall Street investors, even Christian bakers and florists. Despite all the evidence to the contrary, however, they never seem to lose their faith — their almost religious faith — in the fundamental wisdom and benevolence of government regulators.

Atheists sometimes scorn religious believers as irrational for having faith in an omniscient God. But while God's existence can be neither proved nor disproved, every day's news brings fresh evidence of incompetence, fraud, waste, and dishonesty in government: Presidential impeachments. Police brutality. City Hall shakedowns. Campaign-finance chicanery. Men and women who work for the state may not be more prone to venality, bias, or screwing up than other people. But surely they are no less so.

Politicians and public officials are only human. They aren't endowed with superhuman powers or godlike insights. They cannot foretell the future or avoid error. Their moral judgments are no better, on average, than those of the taxpayers who support them. And their schemes and projects are about as likely to succeed as most endeavors are.

An estimated 95 percent of product launches fizzle. Half of all new hires don't work out. Twenty percent of small businesses go bust within a year. If the odds against success are so high even in such limited undertakings, how likely is it that the massive plans touted by candidates like Warren, who has issued more than 60 blueprints, many quite radical, for reconstructing American society, would work out as planned? Even if you find her (or any other candidate's) ideas appealing in the abstract, isn't it overwhelmingly likely that they would fail in the real world? And when that happened, wouldn't the result be even more cynicism toward the federal government?

This month marks the 190th anniversary of an essay every candidate for office ought to read. In January 1830, the British historian and statesman Thomas Babington Macaulay wrote a review of a book on politics and history by Robert Southey, England's poet laureate. Macaulay, a classical liberal, scathingly rejected Southey's statist worldview:

"He conceives that the business of the magistrate is, not merely to see that the persons and property of the people are secure from attack, but that he ought to be a jack-of-all-trades, architect, engineer, schoolmaster, merchant, theologian ... His principle is ... that no man can do anything so well for himself as his rulers ... can do it for him, and that a government approaches nearer and nearer to perfection, in proportion as it interferes more and more with the habits and notions of individuals."

It may be appealing, wrote Macaulay, to imagine that a wise government should guide the people. "But is there any reason for believing that a government is more likely to lead the people in the right way than the people to fall into the right way of themselves?"

Macaulay's plea for prudence and modesty in government is even more relevant in our time than it was in his — and even more likely to go unheeded. Never has America's political class been so sure that it knows exactly how to engineer society. And never have Americans trusted their government less.

SOURCE

Cities move to ban dollar stores, blaming them for residents’ poor diets

About 20 years ago, academic researchers began describing poor urban neighborhoods without supermarkets as “food deserts.” The term captured the attention of elected officials, activists, and the media. They mapped these nutritional wastelands, blamed them on the rise of suburban shopping centers and the decline of mass transit, linked them to chronic health problems suffered by the poor, and encouraged government subsidies to lure food stores to these communities. Despite these efforts, which led to hundreds of new stores opening around the country, community health outcomes haven’t changed significantly, and activists think that they know why. The culprits, they say, are the dollar-discount stores in poor neighborhoods that—or so they claim—drive out supermarkets and sell junk food. Never mind that compelling research suggests a lack of supermarkets isn’t the problem—let alone the popularity of discount stores.

This latest front in the food wars has emerged over the last few years. Communities like Oklahoma City, Tulsa, Fort Worth, Birmingham, and Georgia’s DeKalb County have passed restrictions on dollar stores, prompting numerous other communities to consider similar curbs. New laws and zoning regulations limit how many of these stores can open, and some require those already in place to sell fresh food. Behind the sudden disdain for these retailers—typically discount variety stores smaller than 10,000 square feet—are claims by advocacy groups that they saturate poor neighborhoods with cheap, over-processed food, undercutting other retailers and lowering the quality of offerings in poorer communities. An analyst for the Center for Science in the Public Interest, for instance, argues that, “When you have so many dollar stores in one neighborhood, there’s no incentive for a full-service grocery store to come in.” Other critics, like the Institute for Local Self-Reliance, go further, contending that dollar stores, led by the giant Dollar Tree and Dollar General chains, sustain poverty by making neighborhoods seem run-down. “It’s a recipe for locking in poverty rather than reducing it,” an institute representative told the Washington Post early this year.

Such claims are like salty potato chips for reporters starved for a good angle on how big businesses exploit the poor. A Washington Post headline described how these stores “storm” into cities. A banner in Fast Company declared, “Why dollar stores are bad business for the neighborhoods they open in.” An Atlanta Journal-Constitution article on the stores highlighted a quote from a local official: “We don’t need them on every corner.”

The idea that dollar stores are invaders ignores the fact that these retailers are expanding in neighborhoods that want them. And the notion that the stores lower the quality of retailers in an area overlooks how they’ve become popular in prosperous neighborhoods, too. “What’s driving the growth [of dollar stores] is affluent households,” an expert with consumer-research outfit Nielsen Company told the New York Times Magazine, in a story entitled “The Dollar Store Economy,” published before these stores became the latest bête noire of activists.

Recent research undermines the argument that a lack of fresh, healthy food is to blame for unhealthy diets. In a paper published in the Quarterly Journal of Economics, three economists charted grocery purchases in 10,000 households located in former food deserts, where new supermarkets have since opened. They found that people didn’t buy healthier food when they started shopping at a new local supermarket. “We can statistically conclude that the effect on healthy eating from opening new supermarkets was negligible at best,” they wrote. In other words, the food-desert narrative, which suggests that better food choices motivate people to eat better, is fundamentally incorrect. “In the modern economy, stores have become amazingly good at selling us exactly the kinds of things we want to buy,” the researchers wrote. Put simply, “lower demand for healthy food is what causes the lack of supply.”

Combatting the ill effects of a bad diet involves educating people to change their eating habits. That’s a more complicated project than banning dollar stores. Subsidizing the purchase of fresh fruits and vegetables through the federal food-stamp program and working harder to encourage kids to eat better—as Michelle Obama tried to do with her Let’s Move! campaign—are among the economists’ suggestions for improving the nation’s diet. That’s not the kind of thing that generates sensational headlines. But it makes a lot more sense than banning dollar stores.

SOURCE

******************************

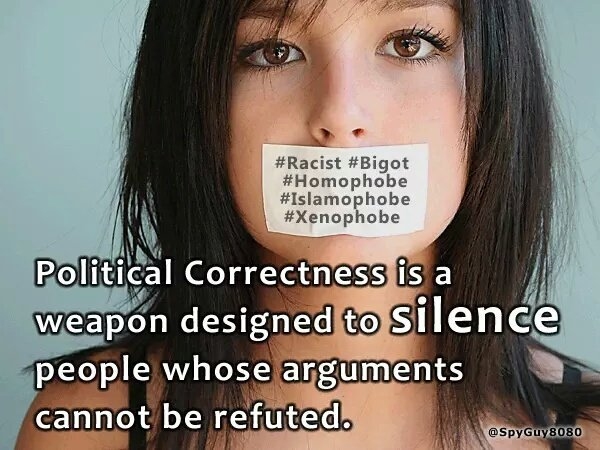

Political correctness is most pervasive in universities and colleges but I rarely report the incidents concerned here as I have a separate blog for educational matters.

American "liberals" often deny being Leftists and say that they are very different from the Communist rulers of other countries. The only real difference, however, is how much power they have. In America, their power is limited by democracy. To see what they WOULD be like with more power, look at where they ARE already very powerful: in America's educational system -- particularly in the universities and colleges. They show there the same respect for free-speech and political diversity that Stalin did: None. So look to the colleges to see what the whole country would be like if "liberals" had their way. It would be a dictatorship.

For more postings from me, see TONGUE-TIED, GREENIE WATCH, EDUCATION WATCH INTERNATIONAL, AUSTRALIAN POLITICS and DISSECTING LEFTISM. My Home Pages are here or here or here. Email me (John Ray) here.

************************************


